This is the 3rd (and final) part of a tutorial about the Kalman Filter for state estimation. Why do we care about state estimation? State Estimation uses math to do what the brain does automatically: combine noisy sensors into a “best guess” estimate. This lets us observe all kinds of systems that can be defined mathematically.

Part 1 discusses the high-level background on State Estimation and how to create system and measurement models.

Part 2 introduces the Extended Kalman Filter equations needed in order to estimate the state of a robot lawnmower.

Finally, this tutorial discusses implementing the EKF for a differential drive mobile robot (ie., a robot lawnmower!), including pseudocode. Our EKF includes: 1) System Update and 2) GPS Measurement Update. Quick disclaimer on my pseudocode… I wrote this using MATLAB syntax. But I havent tested it, so take it as a “guide.”

Implementing System Update

We’re about to implement the Extended Kalman Filter system update for a mobile robot. Here are the relevant EKF system update equations. For a conceptual discussion on the update equations, refer to KF Tutotial Part 1. As a reminder, represents the best estimate of the state vector while

represents the covariance (or uncertainty) in that estimate.

And remember that our differential drive mobile robot uses the state: . The differential-drive transition equation as we have derived it,

in the system update, is expressed using the following equations:

Also, the matrix is the Jacobian of the system update function:

Now lets write some code for the system update, following Matlab notation.

function [state_pre, P_pre] = system_update(state, P, Qk, dt)

% state = [x, y, tht, v, omega]'

% Update State: x_pre = f(x,u), where x=state and u=0

state_pre = [state(1) + state(4)*dt*cos(state(3));

state(2) + state(4)*dt*sin(state(3));

state(3) + state(5)*dt;

state(4);

state(5);

% Calculate Fk

Fk = [ 1 0 -state(4)*dt*sin(state(3)) dt*cos(state(3)) 0;

0 1 state(4)*dt*cos(state(3)) dt*sin(state(3)) 0;

0 0 1 0 dt;

0 0 0 1 0;

0 0 0 0 1];

% Update Covariance: P_pre = F*P*F' + Qk

P_pre = Fk*P*Fk' + Qk

It’s also worth mentioning that is the “system noise” covariance matrix, which is more or less a tuning parameter. The

diagonal elements represent state variance during a single system update.

Implementing GPS Measurement Update

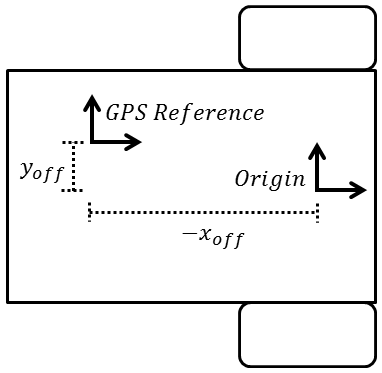

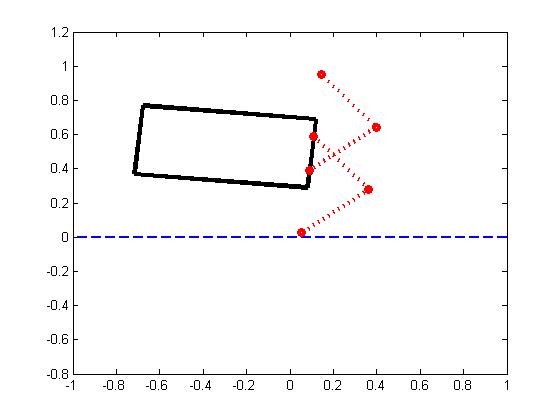

The GPS measurement with a lever arm offset can be expressed as:

Which is what we will use for the GPS measurement update. Here are the EKF Measurement Update equations:

Where , and

is the covariance matrix for the GPS measurement. In this case,

.

Lets do the code for the measurement update!

function [state_post, P_post] = meas_update_gps(state, P, z, Rk, dt, off)

% state = [x, y, theta, v, omega]'

% off = [x_off, y_off]' <- this is the lever arm offset

% z = [x_gps, y_gps] <- this is the actual GPS measurement reading

% Calculate Hk

Hk = [ 1 0 -off(1)*sin(state(3))-off(2)*cos(state(3)) 0 0;

0 1 off(1)*cos(state(3))-off(2)*sin(state(3)) 0 0];

% Find the expected measurement: h(state)

gps_est = [state(1) + off(1)*cos(state(3)) - off(2)*sin(state(3));

state(2) + off(1)*sin(state(3)) + off(2)*cos(state(3))];

% Find Kalman Gain

K = P*H'*(H*P*H'+Rk)^-1;

% Find new state:

state_post = state + K*(z-gps_est);

% Find new covariance:

P_post = P - K*Hk*P

Putting it All Together

Now lets pull our two functions together to come up with our Extended Kalman Filter.

function [state, P] = extended_kalman_filter(state, P, z_gps)

% state = [x, y, theta, v, omega]'

% P = covariance matrix

% z_gps = [x_gps, y_gps]

% Goal: Use the new GPS measurement to update the EKF state and covariance

% Set constants (Or pass into the function)

dt = 0.1; % 10 Hz

Q = diag([0.01, 0.01, 0.001, 0.3, 0.3]);

Qk = Qk(dt); % <- System (Process) Noise

R_gps = [0.1^2 0;

0 0.1^2];

Rk_gps = R_gps*dt; % <- Measurement Noise

off = [0.25; 0]; % <- GPS lever arm offset. x_off = 0.25, y_off = 0

% Extended Kalman Filter:

[state_pre, P_pre] = system_update(state, P, Qk, dt);

[state, P] = meas_update_gps(state_pre, P_pre, z, Rk, Rk, dt, off);

We now have a functional extended kalman filter that can be placed inside a loop to estimate the robot state using GPS measurements.

A neat fact: All five states will be observable! It’s true- using GPS measurements plus knowledge of the robot system, it is possible to extract a best guess for position, heading, velocity, and angular velocity of the robot. This same approach can be applied to any other system that can be modeled with differentiable equations… simply replace the EKF equations with the equations for the new system, and you are in business (also make sure your system is Observable- but that’s a discussion for another day).

A couple easy ways to improve our mobile robot Extended Kalman Filter:

- Add new measurements. Everyone wants more measurements! Another typical mobile robot measurement is “wheel odometry” which essentially observes the wheel rotation using incremental sensors attached to the robot. This provides a huge improvement because you can get odometry readings at, say 50 Hz whereas GPS is typically slower.

- Improve the system. Note that the measurement system always uses an old heading to propagate the state. It has been shown that a more precise approach is to use the angular velocity to propagate this heading to the “midpoint” of each measurement. This, naturally, results in a far more complicated system update- But it has significantly better results (maybe an order of magnitude better, depending on how large the timestep dt is).

And of course, the same Extended Kalman Filter procedure applies to many other situations (surprisingly, autonomous robot lawnmowers are not the only use for the EKF). In fact, EKFs are a go-to approach for most state estimation problems in existence today… How do rockets determine their orientation while trying to land? I would take any bet the rocket uses an EKF or EKF-related algorithm. Enjoy the newfound power of state estimation! Thanks for reading.